Authors: Chen Wei*, Chi Zhang*, Jiachen Zou, Haotian Deng, Dietmar Heinke, Quanying Liu

*Chen Wei and Chi Zhang contributed equally to this work.

Date: October 2, 2024

Supervisors: Quanying Liu, Dietmar Heinke

Introduction

Motivation:

Human perception of ambiguous stimuli varies across individuals, and artificial neural networks (ANNs) struggle to capture this variability. Understanding it is key to predicting individual perception under uncertainty. This study explores whether images generated from ANN perceptual boundaries can reveal and manipulate human perceptual variability.

Method:

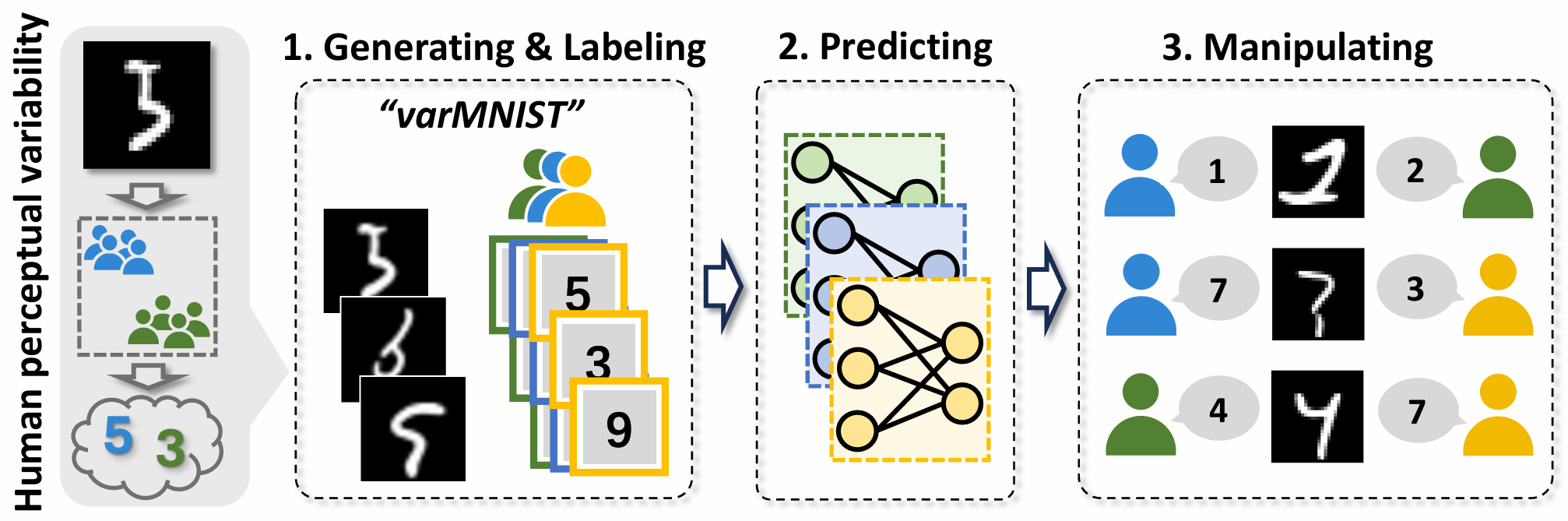

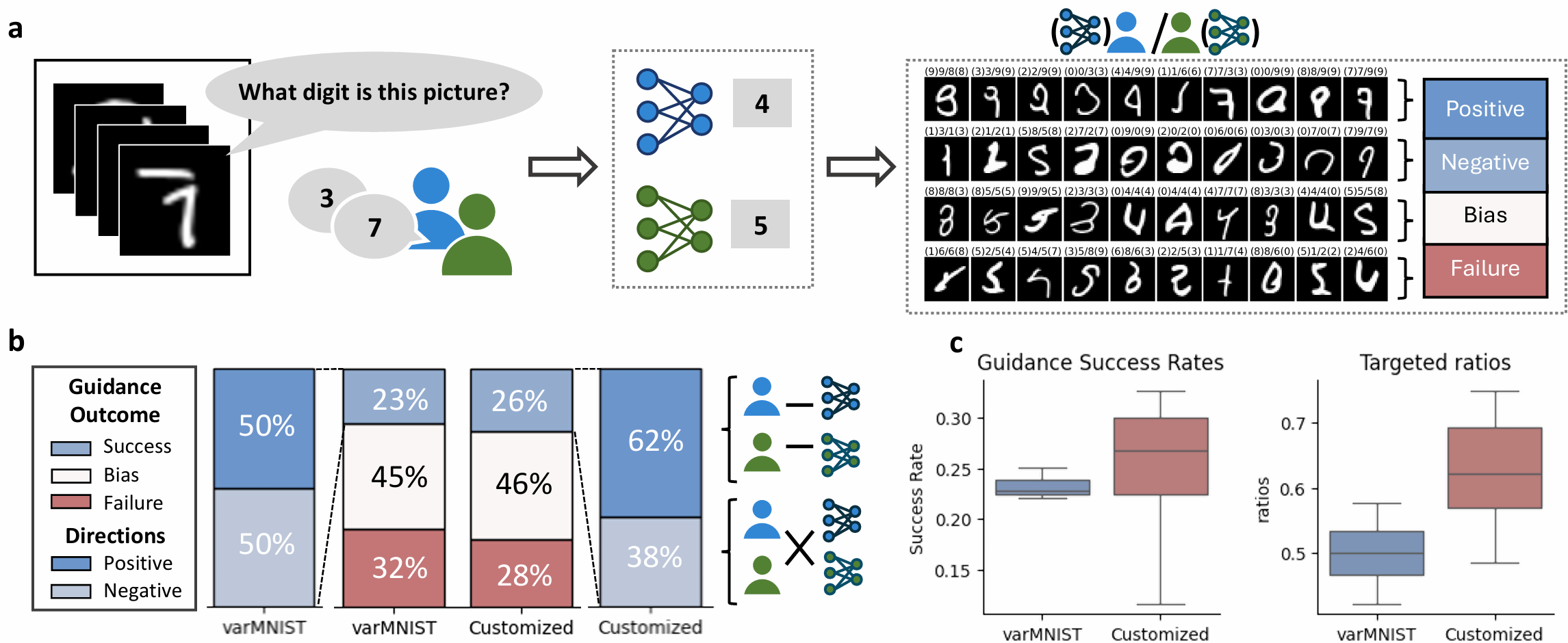

We present a paradigm for studying human perceptual variability through image generation: 1) Generating & labeling: images sampled from the decision boundaries of ANNs are used in human behavioral experiments to construct a high-variability dataset, varMNIST. 2) Predicting: human behavioral data are used to fine-tune models, aligning them with human perceptual variability at both the group and individual levels. 3) Manipulating: individually fine-tuned models serve as adversarial classifiers to generate new images that amplify perceptual differences between individuals.

Contribution:

- A novel generative approach to probe human perception.

- Aligning human and ANN perceptual variability.

- Revealing and manipulating individual decisions.

Collecting Human Perceptual Variability

Generating images by sampling from the perceptual boundary of ANNs:

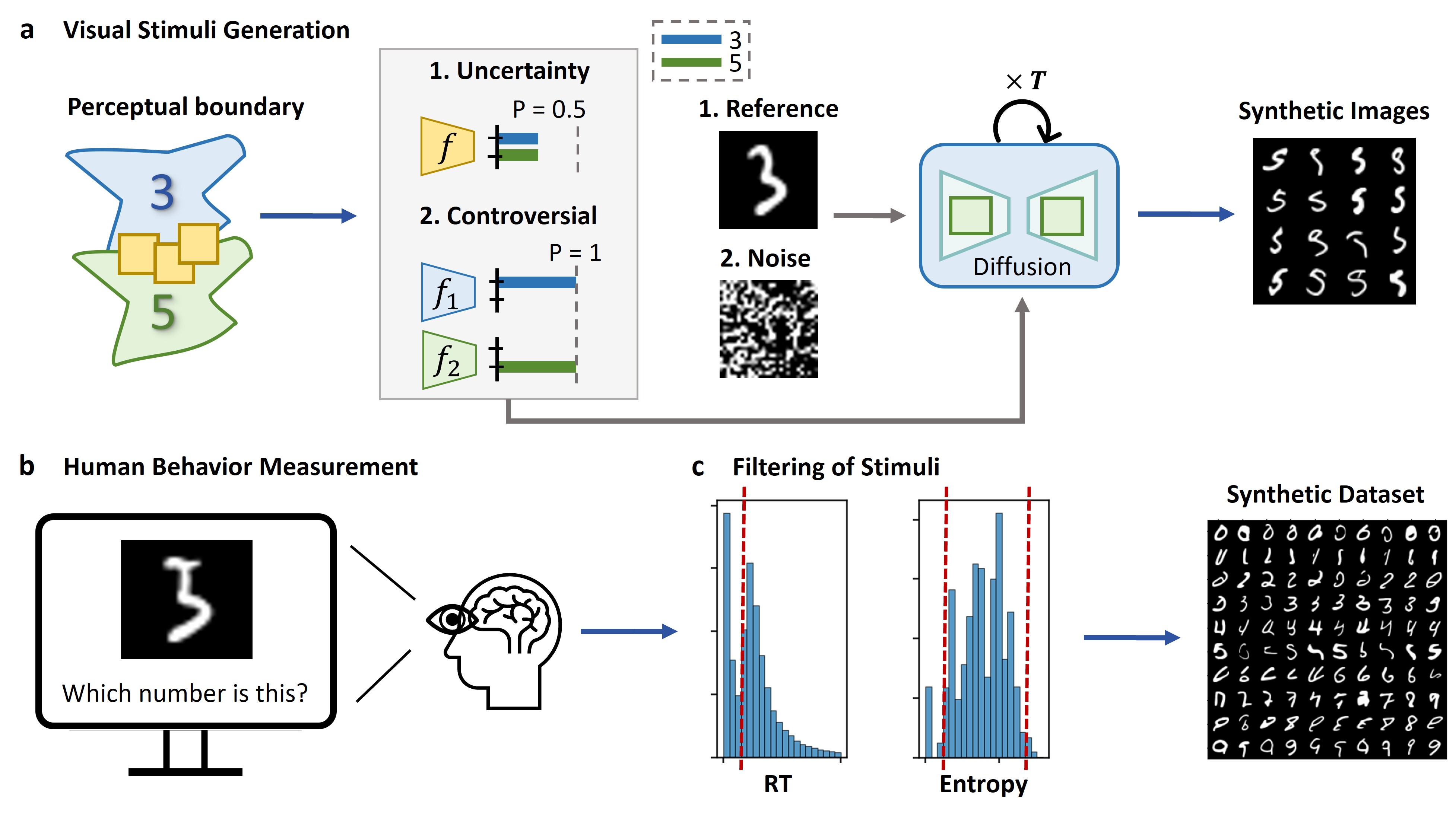

We sampled images along ANN decision boundaries to induce high perceptual variability using two methods:

- Uncertainty Guidance: Maximizes the entropy of an ANN’s predicted class distribution, generating ambiguous images near its decision boundary.

- Controversial Guidance: Maximizes the KL divergence between the predicted distributions of two ANNs, producing images on which the two models disagree.
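Both guidance objectives can be written as scores on a classifier's output. The sketch below assumes each classifier returns logits over the ten digit classes and uses NumPy; the function names are hypothetical, and the KL direction (first model relative to the second) is an assumption because the direction is not specified here. In a guided generator these scores would be differentiated with respect to the image at each sampling step, which this sketch does not show.

```python
import numpy as np

def softmax(logits):
    # Subtract the max for numerical stability before exponentiating.
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def uncertainty_objective(logits):
    """Entropy (nats) of one classifier's predicted distribution.

    Highest when the classifier is maximally unsure, i.e. near its decision boundary.
    """
    p = softmax(logits)
    return -np.sum(p * np.log(p + 1e-12), axis=-1)

def controversial_objective(logits_a, logits_b):
    """KL(p_a || p_b) between two classifiers' predictions (assumed direction).

    Large when the two models assign their probability mass to different classes.
    """
    p = softmax(logits_a)
    q = softmax(logits_b)
    return np.sum(p * (np.log(p + 1e-12) - np.log(q + 1e-12)), axis=-1)
```

For ten equal logits, `uncertainty_objective` returns log(10), about 2.303 nats, its maximum; identical logits passed to `controversial_objective` give zero.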

Improving generation quality with a digit judgment surrogate:

We conducted a digit judgment experiment in which participants judged whether generated images looked like handwritten digits. Their judgments were used to train a digit judgment surrogate, which then guided image generation.
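A minimal sketch of the surrogate idea, assuming a logistic-regression surrogate trained with plain gradient descent on flattened images and binary "looks like a digit" judgments. The surrogate's actual architecture is not specified here, and `train_digit_surrogate`, `log_digit_prob`, `guided_score` and the weight `lam` are hypothetical names. Adding the surrogate's log-probability to a variability objective rewards images that are both ambiguous and digit-like.

```python
import numpy as np

def train_digit_surrogate(images, judgments, lr=0.1, epochs=500):
    """Fit a hypothetical logistic-regression surrogate p(looks like a digit | image).

    images: (n, d) array of flattened pixels; judgments: (n,) array of 0/1 human labels.
    """
    n, d = images.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(images @ w + b)))
        grad = p - judgments  # gradient of mean binary cross-entropy w.r.t. the logit
        w -= lr * images.T @ grad / n
        b -= lr * grad.mean()
    return w, b

def log_digit_prob(images, w, b):
    """Numerically stable log sigmoid of the surrogate's logit."""
    z = images @ w + b
    return -np.logaddexp(0.0, -z)

def guided_score(variability, images, w, b, lam=1.0):
    """Hypothetical combined objective: perceptual variability + lam * log p(digit)."""
    return variability + lam * log_digit_prob(images, w, b)
```

Larger `lam` trades ambiguity for digit-likeness; the right balance would have to be tuned.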

Measuring Human Perceptual Variability with a Recognition Experiment:

Images synthesized with both forms of guidance (ANN perceptual boundary sampling and the digit judgment surrogate) were used in a human digit recognition experiment. In total, 19,952 images were shown across 123,000 trials to 246 participants, yielding the high-perceptual-variability dataset varMNIST.
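These figures imply 123,000 / 246 = 500 trials per participant. If each trial shows a single image, that is roughly 6.2 responses per image on average. One simple way to quantify perceptual variability from such responses is the entropy of the labels each image received. The sketch below illustrates that assumption; the helper names and the choice of base-2 entropy are hypothetical, and it is not necessarily the measure used to build varMNIST.

```python
import math
from collections import Counter, defaultdict

def response_entropy(labels):
    """Shannon entropy (bits) of the digit labels participants gave one image."""
    n = len(labels)
    counts = Counter(labels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

def entropy_by_image(trials):
    """trials: iterable of (image_id, label) pairs; returns image_id -> response entropy."""
    labels = defaultdict(list)
    for image_id, label in trials:
        labels[image_id].append(label)
    return {img: response_entropy(ls) for img, ls in labels.items()}
```

A unanimously labeled image scores 0 bits. An image split evenly between two digits scores 1 bit.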

Predicting Human Perceptual Variability

Fine-tuning Models for Human Alignment:

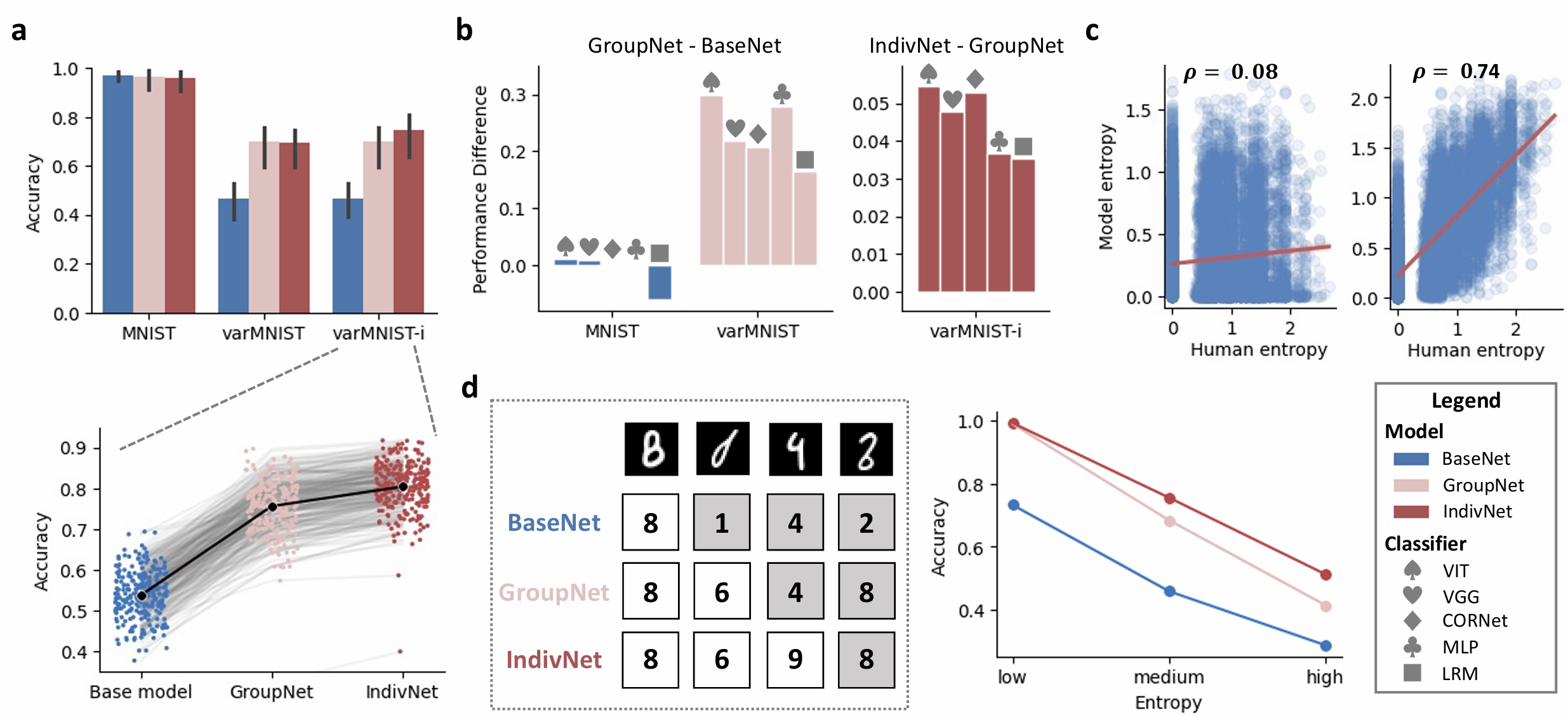

- We fine-tuned models with a mixed training approach. Group training combined MNIST and varMNIST. Individual training combined varMNIST-i (a single participant's varMNIST responses), varMNIST and MNIST. Fine-tuning improved model accuracy, and individual fine-tuning provided additional gains.
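Below is a minimal sketch of mixed training data. It assumes each batch draws examples from several datasets in fixed proportions. No mixing ratios or sampling scheme are given here, so `mixed_batches` and the weights are hypothetical. One plausible reason to mix MNIST in with the human-labeled data is to keep the fine-tuned model from drifting away from clean digit recognition.

```python
import random

def mixed_batches(datasets, weights, batch_size, num_batches, seed=0):
    """Yield batches whose examples are drawn from several datasets in given proportions.

    datasets: dict name -> list of (image, label) pairs
    weights:  dict name -> relative sampling weight (hypothetical values)
    Each yielded item is (source_name, example) so the source can be tracked.
    """
    rng = random.Random(seed)
    names = list(datasets)
    probs = [weights[n] for n in names]
    for _ in range(num_batches):
        # Pick a source dataset for every slot in the batch, then an example from it.
        sources = rng.choices(names, weights=probs, k=batch_size)
        yield [(src, rng.choice(datasets[src])) for src in sources]
```

Group training would pass the `"MNIST"` and `"varMNIST"` datasets. Individual training would add a third entry such as `"varMNIST-i"` holding one participant's data.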

Different Classifiers Show Varying Fine-tuning Performance:

- Fine-tuning improved the performance of most classifiers. ViT and MLP showed the largest gains, while LRM adapted least.

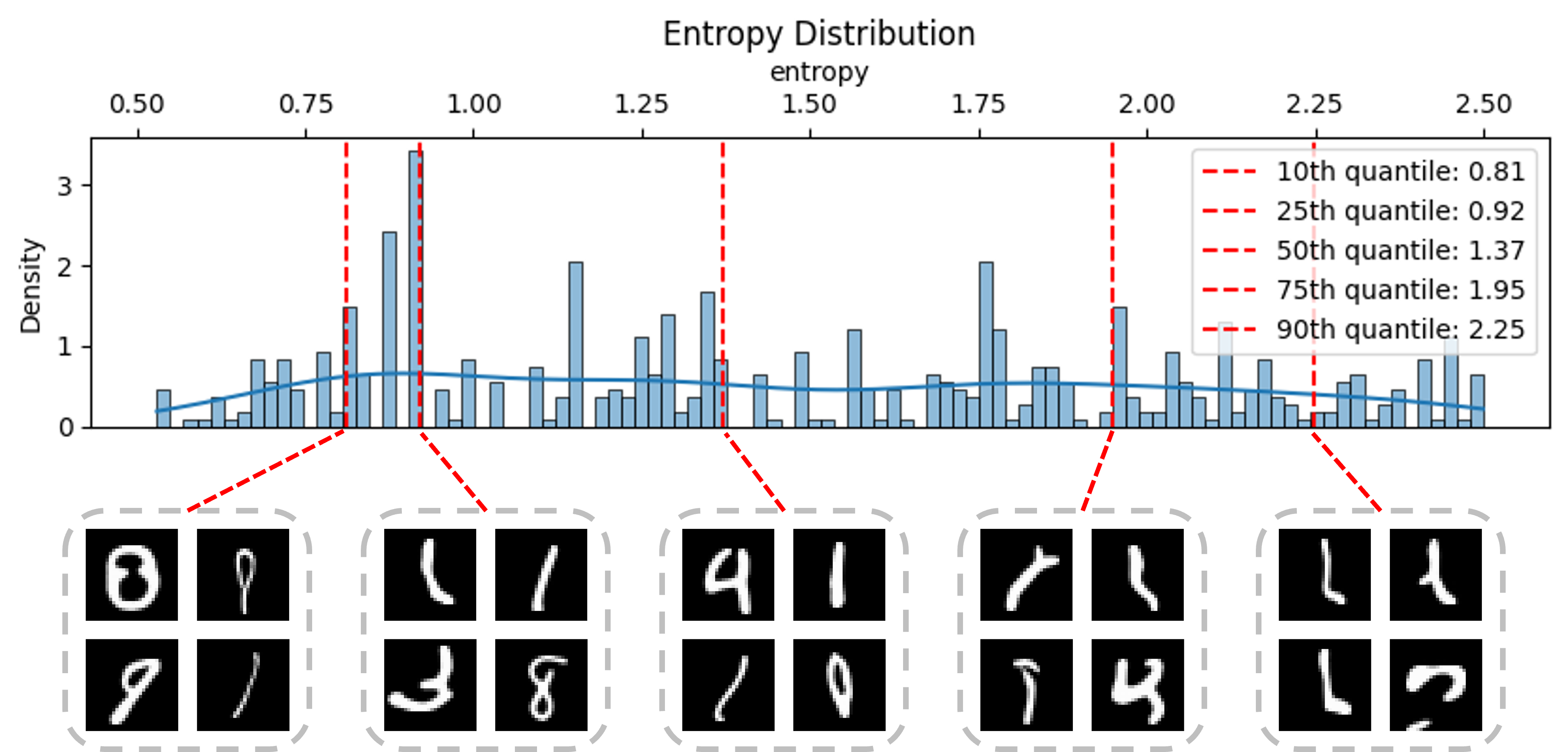

Performance across Images with Varying Entropy Levels:

- Fine-tuned models outperformed the base model at all difficulty levels. Individual fine-tuning helped most on the most challenging (high-entropy) samples, indicating better adaptation to ambiguous stimuli.
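To compare models across difficulty levels, per-image accuracy can be averaged within entropy bins. This sketch assumes each image already has an entropy value (for example, from human responses) and a 0/1 correctness flag for a given model. The helper name and bin edges are hypothetical. Running it once for the base model and once for each fine-tuned model gives per-bin comparisons like those described above.

```python
import numpy as np

def accuracy_by_entropy_bin(entropies, correct, edges):
    """Mean accuracy within each half-open entropy bin [edges[i], edges[i+1]).

    Returns NaN for empty bins. Values >= edges[-1] or < edges[0] are ignored.
    """
    entropies = np.asarray(entropies, dtype=float)
    correct = np.asarray(correct, dtype=float)
    # np.digitize returns i such that edges[i-1] <= x < edges[i]; shift to 0-based bins.
    idx = np.digitize(entropies, edges) - 1
    result = []
    for i in range(len(edges) - 1):
        mask = idx == i
        result.append(float(correct[mask].mean()) if mask.any() else float("nan"))
    return result
```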

Manipulating Human Perceptual Variability

Experimental Paradigm:

- We tested whether fine-tuned individual models could amplify perceptual differences and guide decisions. In the first round, 500 varMNIST samples were used to fine-tune individual models. In the second round, controversial stimuli generated from these models were presented to pairs of participants to evaluate whether the stimuli could steer each participant's decision in the intended direction.

Manipulation Results:

Guidance Outcome (success, bias, or failure): Compared with the group-level (varMNIST) model, individual fine-tuning raised the success rate by 3%, reduced failures by 4% and increased bias by 1%, even with a small amount of individual training data.

Targeted Ratio (the proportion of trials where participants’ choices aligned with the guidance direction): The targeted ratio improved by 12%, indicating that fine-tuning made the model's guidance both more effective and more precise.
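The targeted ratio follows directly from its definition. This sketch assumes each controversial stimulus is shown to both members of a pair, with a target class for each participant. The trial tuple layout and function names are hypothetical. The success/bias/failure categories are not modeled because their exact definitions are not given here.

```python
def targeted_ratio(responses, targets):
    """Fraction of responses that match the class each stimulus was generated to elicit."""
    if len(responses) != len(targets):
        raise ValueError("responses and targets must have the same length")
    if not responses:
        raise ValueError("need at least one trial")
    hits = sum(r == t for r, t in zip(responses, targets))
    return hits / len(responses)

def pair_targeted_ratio(trials):
    """trials: iterable of (choice_a, target_a, choice_b, target_b) for one participant pair."""
    responses, targets = [], []
    for choice_a, target_a, choice_b, target_b in trials:
        responses += [choice_a, choice_b]
        targets += [target_a, target_b]
    return targeted_ratio(responses, targets)
```

For example, if participant A follows the guidance on both stimuli but participant B follows it on only one of two, the pair's targeted ratio is 3/4.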

Discussion

Key Findings:

- Eliciting Human Perceptual Variability Through Generated Samples.

- Achieving Individual Alignment by Predicting Human Perceptual Variability.

- Revealing and Manipulating Perceptual Variability Using Individual Models.

Future Works:

- Expanding the dataset to natural images and more diverse participants would better capture human variability.

- AI-human alignment could be further improved using optimal experimental design.

This paper was published at ICML 2025.