Authors: Dongyang Li*, Chen Wei*, Shiying Li, Jiachen Zou, Quanying Liu

*Dongyang Li and Chen Wei contributed equally to this work.

Date: March 14, 2024

Supervisor: Quanying Liu

Introduction

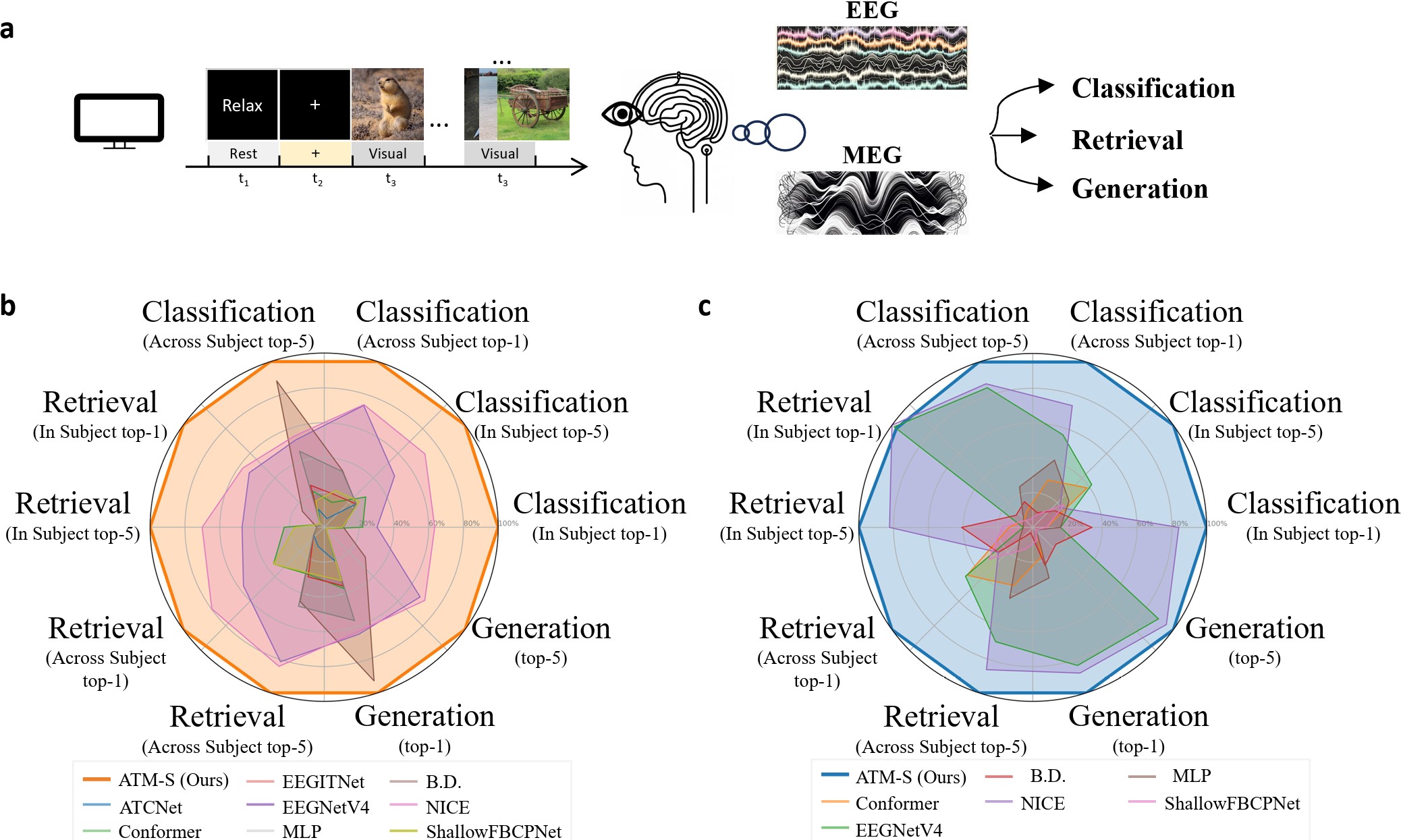

A central challenge in brain-computer interface (BCI) technology is accurately decoding and reconstructing, from brain signals, the visual world that humans perceive. Established modalities such as fMRI are effective but limited by high cost, low portability, and low temporal resolution, which makes them impractical for real-world BCI applications. EEG has clear potential in this domain, yet it remains underexplored because of its inherent limitations, such as a low signal-to-noise ratio and low spatial resolution.

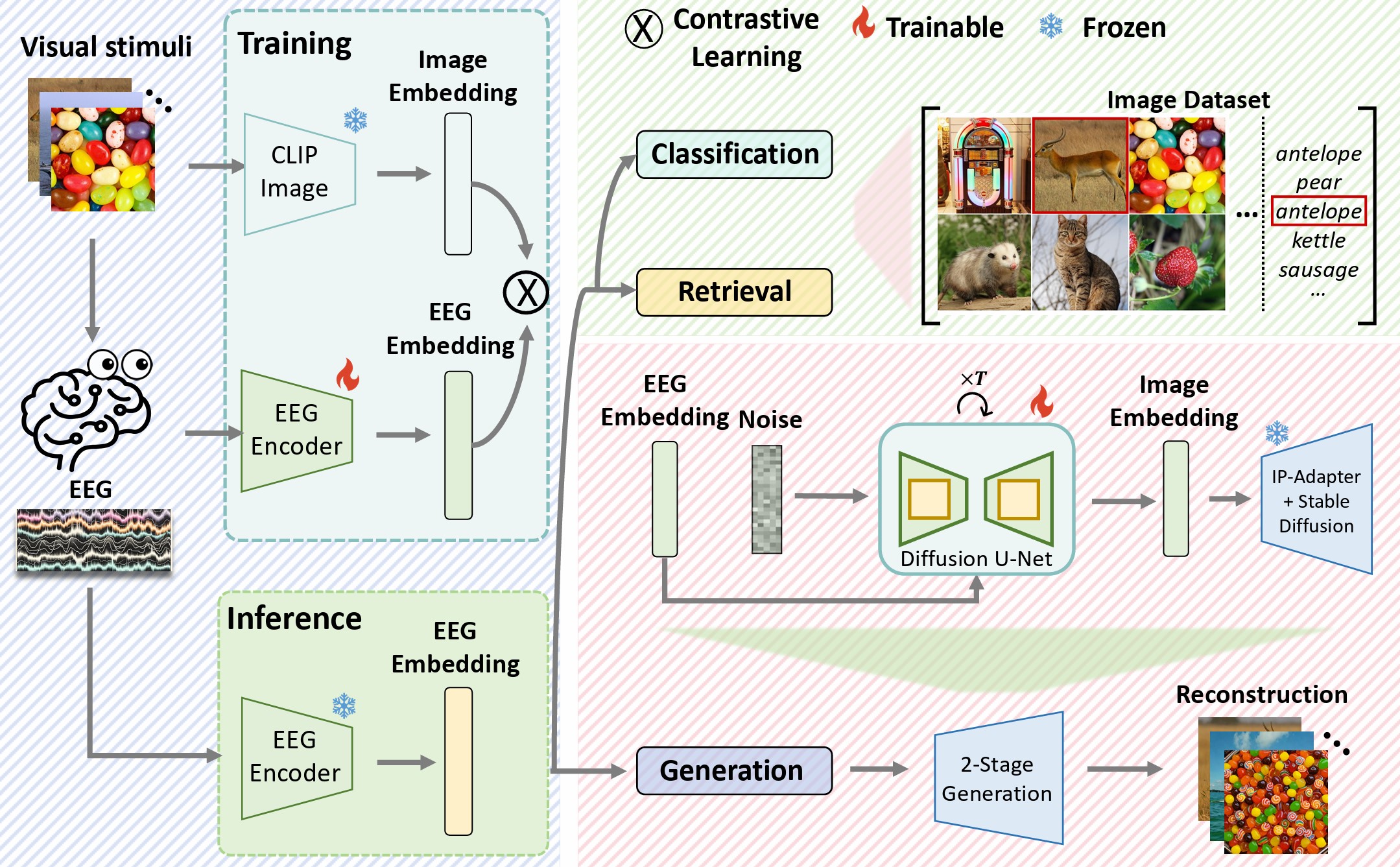

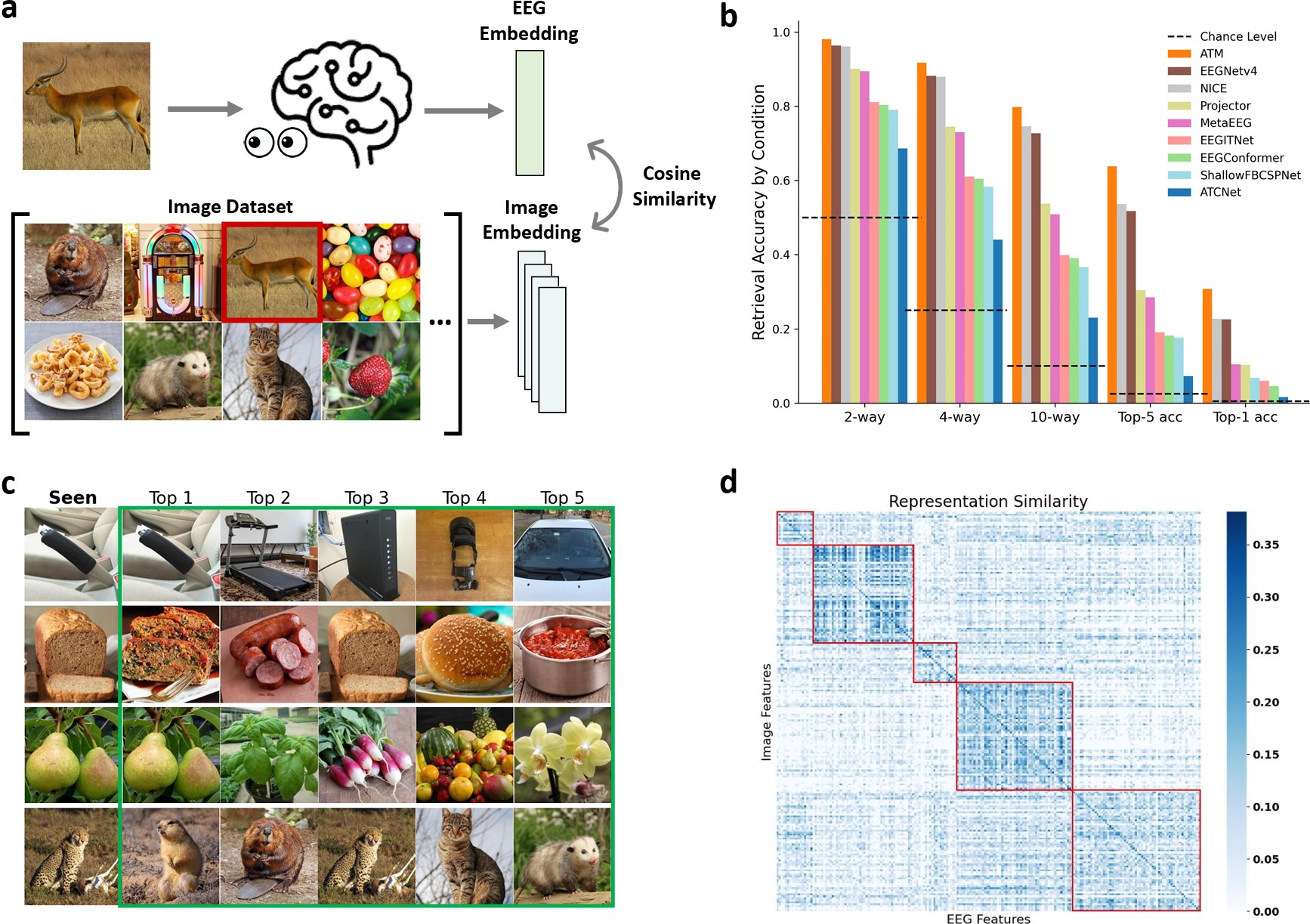

Our project introduces an EEG-based visual reconstruction framework built on two components: a novel EEG encoder, the Adaptive Thinking Mapper (ATM), and a two-stage image generation process. Together they substantially improve EEG performance on image classification, retrieval, and reconstruction tasks:

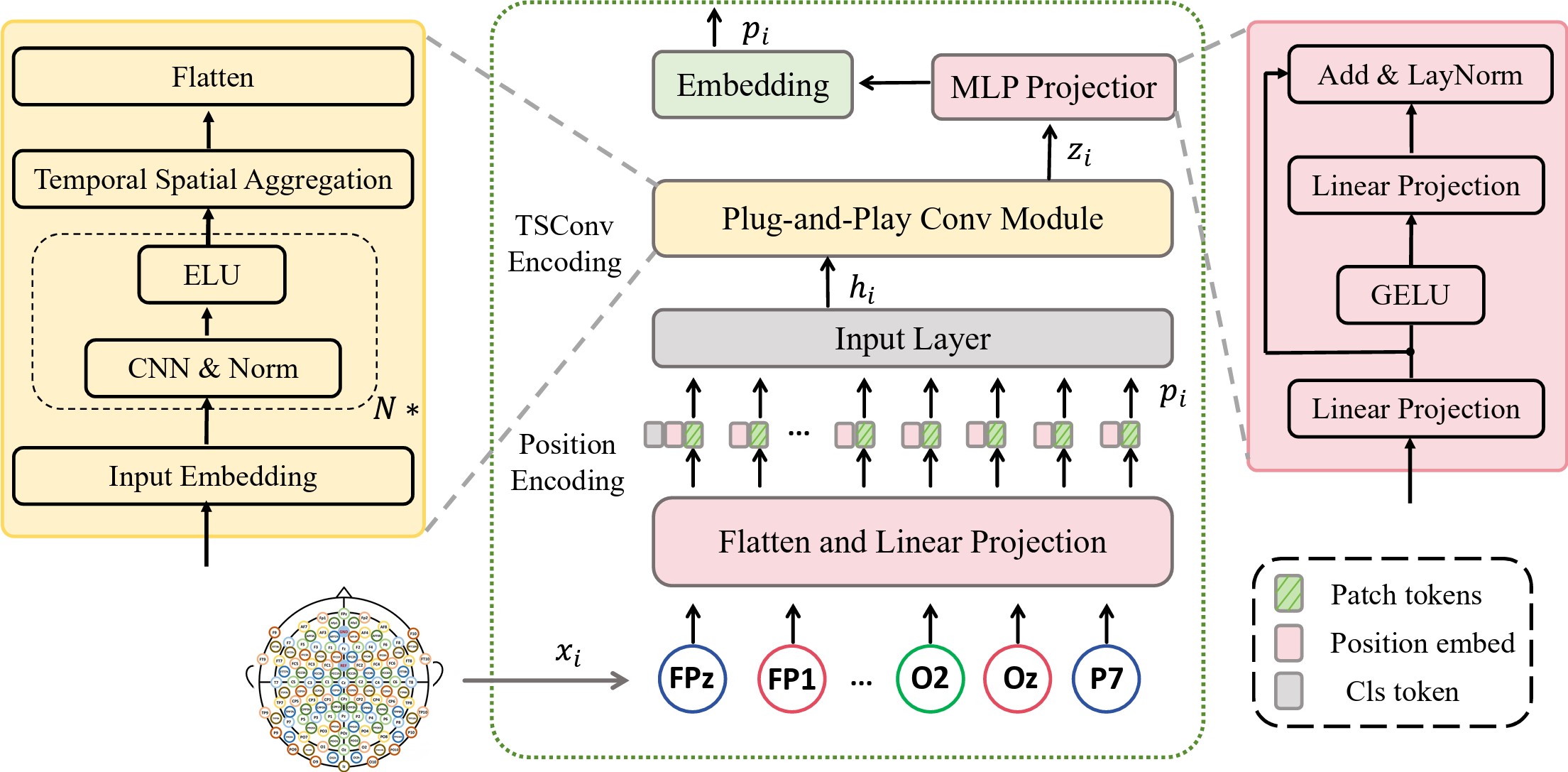

- Adaptive Thinking Mapper (ATM): A state-of-the-art EEG encoder that combines attention modules with spatiotemporal convolution to extract informative features from EEG and MEG data efficiently.

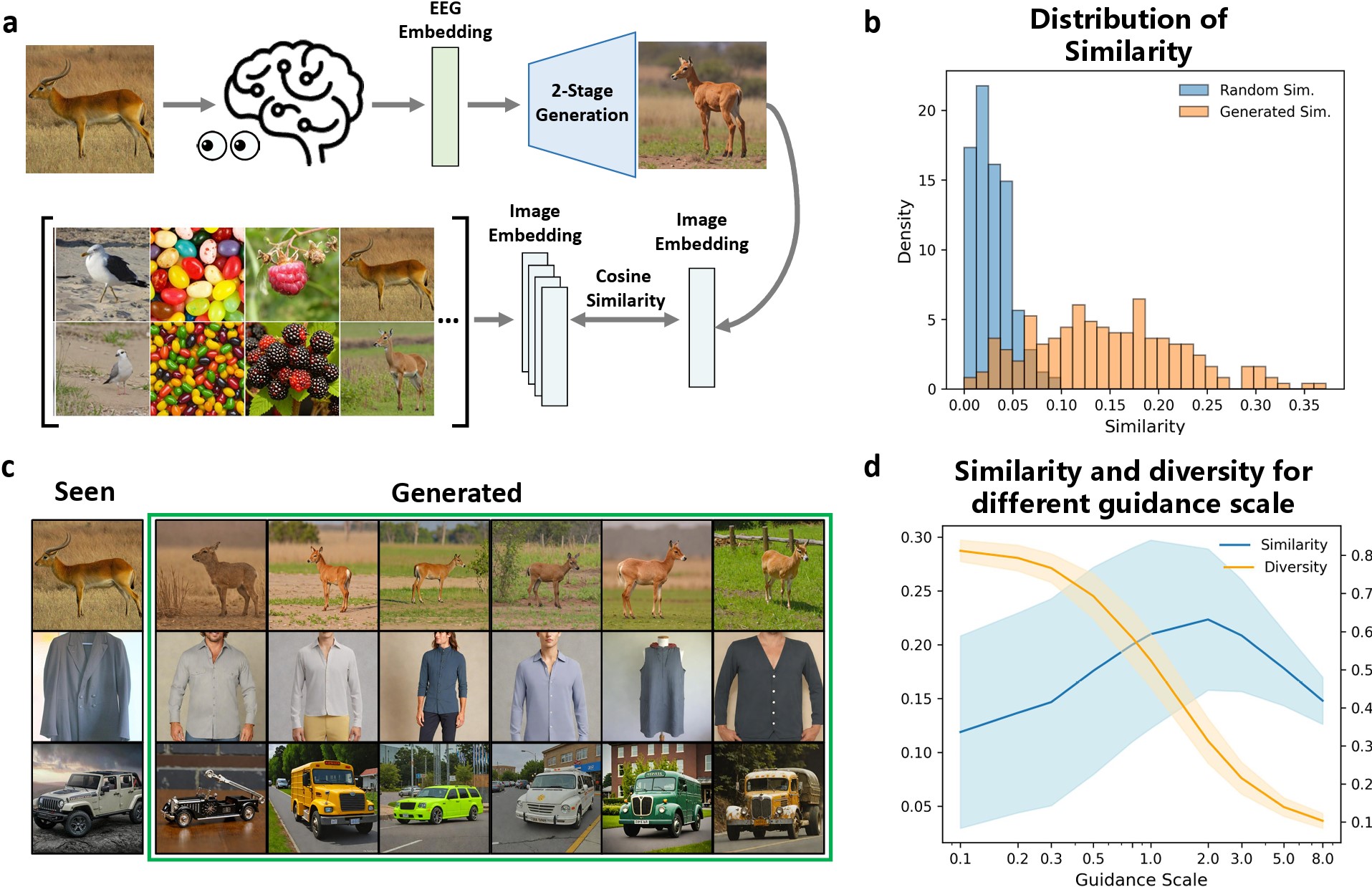

- Two-Stage Image Generation: Our method first uses a diffusion model to transform EEG features into image priors (CLIP image embeddings), then reconstructs the visual stimuli from these priors with Stable Diffusion (SDXL with IP-Adapter). This approach achieves superior image generation quality and also shows that the framework adapts to different brain signal modalities.
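One common way to run classification and retrieval in a shared embedding space is zero-shot: each EEG embedding is compared against the embeddings of all candidate images, and the most similar candidate is the prediction. The sketch below illustrates that evaluation together with a CLIP-style symmetric contrastive (InfoNCE) loss, a standard way to align two embedding spaces. It is an assumption-laden illustration, not the project's code: the function names, the temperature of 0.07, and the 200-candidate, 1024-dimensional random data are all hypothetical.

```python
import numpy as np


def l2_normalize(x, axis=-1, eps=1e-8):
    """Scale each row to unit length so dot products become cosine similarities."""
    return x / (np.linalg.norm(x, axis=axis, keepdims=True) + eps)


def clip_style_loss(eeg_emb, img_emb, temperature=0.07):
    """Symmetric InfoNCE: trial i should match image i and no other image in the batch."""
    logits = l2_normalize(eeg_emb) @ l2_normalize(img_emb).T / temperature
    labels = np.arange(len(logits))

    def cross_entropy(lg):
        lg = lg - lg.max(axis=1, keepdims=True)  # numerical stability
        log_probs = lg - np.log(np.exp(lg).sum(axis=1, keepdims=True))
        return -log_probs[labels, labels].mean()  # correct pairs lie on the diagonal

    # Average the EEG->image and image->EEG directions.
    return 0.5 * (cross_entropy(logits) + cross_entropy(logits.T))


def top_k_accuracy(eeg_emb, img_emb, k=1):
    """Zero-shot classification/retrieval: rank every candidate image by cosine similarity."""
    sims = l2_normalize(eeg_emb) @ l2_normalize(img_emb).T
    top_k = np.argsort(-sims, axis=1)[:, :k]
    hits = (top_k == np.arange(len(sims))[:, None]).any(axis=1)
    return hits.mean()


# Toy data: random stand-ins for image embeddings and two hypothetical encoders.
rng = np.random.default_rng(0)
img = rng.normal(size=(200, 1024))
eeg_aligned = img + 0.1 * rng.normal(size=img.shape)  # well-aligned encoder output
eeg_random = rng.normal(size=img.shape)                # untrained encoder output

print("top-1 (aligned):", top_k_accuracy(eeg_aligned, img, k=1))
print("top-5 (random): ", top_k_accuracy(eeg_random, img, k=5))
print("loss aligned vs random:", clip_style_loss(eeg_aligned, img), clip_style_loss(eeg_random, img))
```

Chance level for top-k accuracy with N candidates is k/N, so retrieval numbers are only meaningful when read against that baseline.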

Method

EEG Embedding with Adaptive Thinking Mapper (ATM):

- Transformer and Spatiotemporal Convolution: ATM combines positional encoding with the input data embeddings and processes them with self-attention. It then applies a spatiotemporal convolution module to capture temporal and spatial patterns in the EEG signal. The encoder outputs EEG embeddings, which are aligned with image embeddings for the downstream tasks.
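The encoder pipeline described above can be sketched as a single NumPy forward pass. This is a simplified illustration under stated assumptions, not the ATM implementation: it treats each electrode's time course as one token (a hypothetical 63 channels x 250 samples), uses single-head attention with random weights, a temporal-then-spatial convolution in the style of ShallowConvNet, an ELU activation, and a linear projection to a 1024-dimensional output. All sizes, parameter names, and helper names are hypothetical.

```python
import numpy as np


def positional_encoding(n_tokens, d_model):
    """Sine/cosine positional encoding from the original Transformer, shape (n_tokens, d_model)."""
    pos = np.arange(n_tokens)[:, None]
    dim = np.arange(d_model)[None, :]
    angle = pos / np.power(10000.0, (2 * (dim // 2)) / d_model)
    return np.where(dim % 2 == 0, np.sin(angle), np.cos(angle))


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention across tokens (here: EEG channels)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])
    scores = scores - scores.max(axis=-1, keepdims=True)  # numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    return weights @ v


def atm_like_encoder(eeg, p):
    """Hypothetical ATM-style encoder: (n_channels, n_times) -> unit-norm embedding."""
    n_ch, n_t = eeg.shape
    # 1. Each channel's time course is one token; add positional information.
    tokens = eeg + positional_encoding(n_ch, n_t)
    # 2. Self-attention across channels, with a residual connection.
    x = tokens + self_attention(tokens, p["w_q"], p["w_k"], p["w_v"])
    # 3. Temporal convolution: the same 1-D kernel slides along time in every channel.
    x = np.stack([np.convolve(row, p["temporal_kernel"], mode="valid") for row in x])
    # 4. Spatial convolution: each output filter is a weighted sum over all channels.
    x = p["spatial"] @ x
    x = np.where(x > 0, x, np.expm1(np.minimum(x, 0)))  # ELU activation (assumed)
    # 5. Average-pool over time, flatten, and project to the image-embedding size.
    pool = p["pool"]
    n_keep = x.shape[1] - x.shape[1] % pool
    x = x[:, :n_keep].reshape(x.shape[0], -1, pool).mean(axis=-1)
    emb = x.ravel() @ p["proj"]
    return emb / np.linalg.norm(emb)


# Hypothetical sizes and random (untrained) weights, for shape-checking only.
rng = np.random.default_rng(0)
n_ch, n_t, d_k, n_filters, k_len, pool, d_emb = 63, 250, 64, 40, 25, 5, 1024
t_out = (n_t - k_len + 1) // pool
params = {
    "w_q": rng.normal(0, n_t ** -0.5, (n_t, d_k)),
    "w_k": rng.normal(0, n_t ** -0.5, (n_t, d_k)),
    "w_v": rng.normal(0, n_t ** -0.5, (n_t, n_t)),
    "temporal_kernel": rng.normal(0, k_len ** -0.5, k_len),
    "spatial": rng.normal(0, n_ch ** -0.5, (n_filters, n_ch)),
    "pool": pool,
    "proj": rng.normal(0, (n_filters * t_out) ** -0.5, (n_filters * t_out, d_emb)),
}
emb = atm_like_encoder(rng.normal(size=(n_ch, n_t)), params)
print(emb.shape)
```

Normalizing the output to unit length makes it directly comparable, by cosine similarity, with normalized CLIP image embeddings during alignment.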

EEG-Guided Two-Stage Image Generation:

- Stage I - Prior Diffusion: A diffusion model conditioned on EEG embeddings generates CLIP image embeddings.

- Stage II - Image Reconstruction: Using pretrained generative models such as SDXL together with IP-Adapter, we convert the generated CLIP image embeddings into the final images.
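Stage I can be illustrated with a standard DDPM in ε-prediction form, where the sample being denoised is a CLIP image-embedding vector and the condition is the EEG embedding. The sketch assumes a linear 1,000-step noise schedule and a hypothetical network `denoiser(x_t, t, eeg_emb)`. To exercise the code without training anything, `oracle_denoiser` is a toy stand-in that pretends the clean image embedding equals the EEG embedding, so sampling recovers that embedding exactly. The project's actual prior architecture, schedule, and parameterization may differ.

```python
import numpy as np

T = 1000
betas = np.linspace(1e-4, 0.02, T)  # linear DDPM noise schedule (assumed)
alphas = 1.0 - betas
alpha_bars = np.cumprod(alphas)


def q_sample(x0, t, noise):
    """Forward process: corrupt a clean CLIP image embedding x0 to noise level t."""
    return np.sqrt(alpha_bars[t]) * x0 + np.sqrt(1.0 - alpha_bars[t]) * noise


def training_loss(denoiser, x0, eeg_emb, rng):
    """Epsilon-prediction loss for one (image embedding, EEG embedding) pair."""
    t = rng.integers(T)
    noise = rng.normal(size=x0.shape)
    x_t = q_sample(x0, t, noise)
    return np.mean((denoiser(x_t, t, eeg_emb) - noise) ** 2)


def sample_prior(denoiser, eeg_emb, dim, rng):
    """Ancestral DDPM sampling of an image embedding conditioned on an EEG embedding."""
    x = rng.normal(size=dim)
    for t in reversed(range(T)):
        eps = denoiser(x, t, eeg_emb)
        mean = (x - betas[t] / np.sqrt(1.0 - alpha_bars[t]) * eps) / np.sqrt(alphas[t])
        if t > 0:
            # Posterior variance of q(x_{t-1} | x_t, x_0).
            var = betas[t] * (1.0 - alpha_bars[t - 1]) / (1.0 - alpha_bars[t])
            x = mean + np.sqrt(var) * rng.normal(size=dim)
        else:
            x = mean  # no noise is added at the final step
    return x


def oracle_denoiser(x_t, t, eeg_emb):
    """Toy stand-in for a trained network: assumes the clean embedding equals eeg_emb."""
    return (x_t - np.sqrt(alpha_bars[t]) * eeg_emb) / np.sqrt(1.0 - alpha_bars[t])


rng = np.random.default_rng(0)
target = rng.normal(size=1024)  # hypothetical EEG embedding, already aligned with CLIP space
sample = sample_prior(oracle_denoiser, target, 1024, rng)
print(np.abs(sample - target).max())
```

In practice `oracle_denoiser` would be replaced by a network trained with `training_loss`, and the sampled embedding would then be passed to the Stage II generator.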

Results

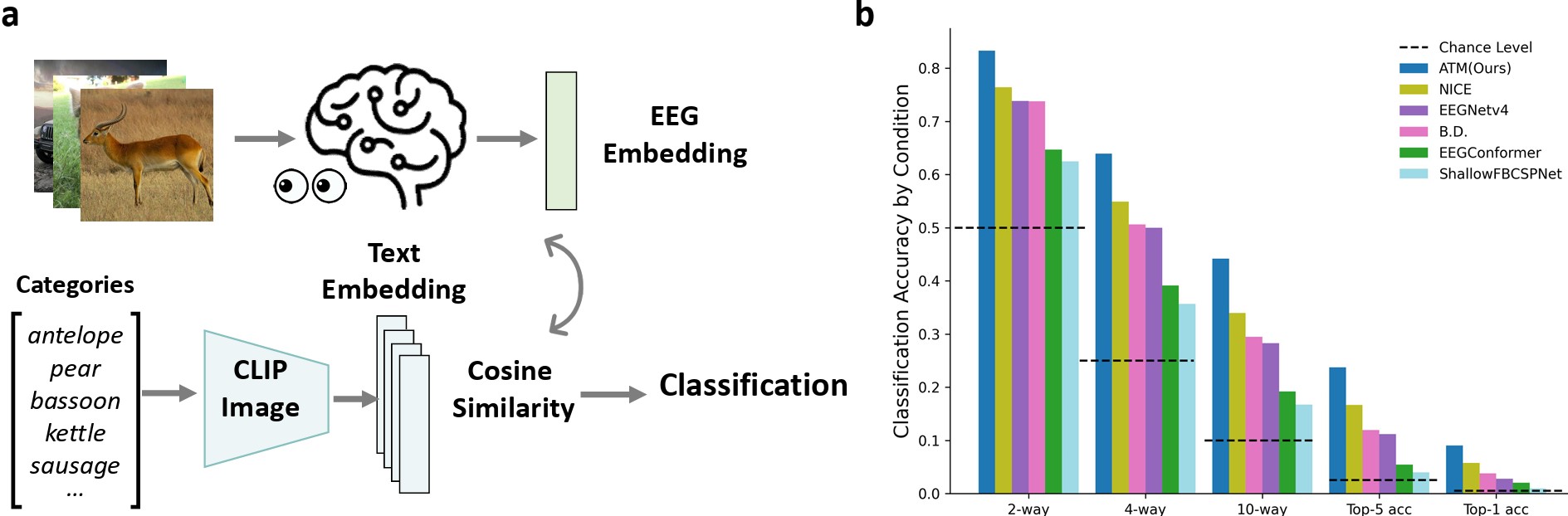

EEG decoding performance

Image generation performance

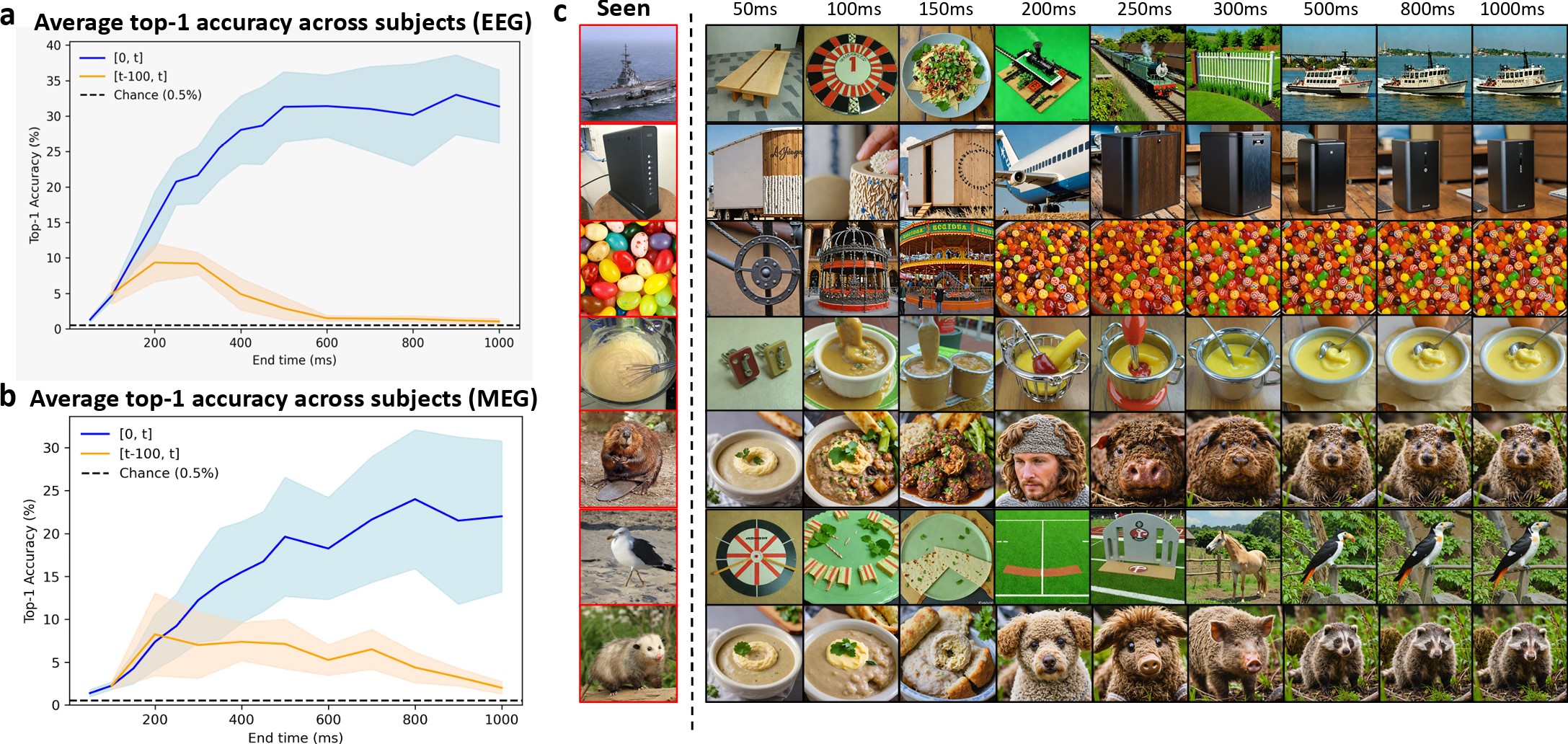

Temporal analysis

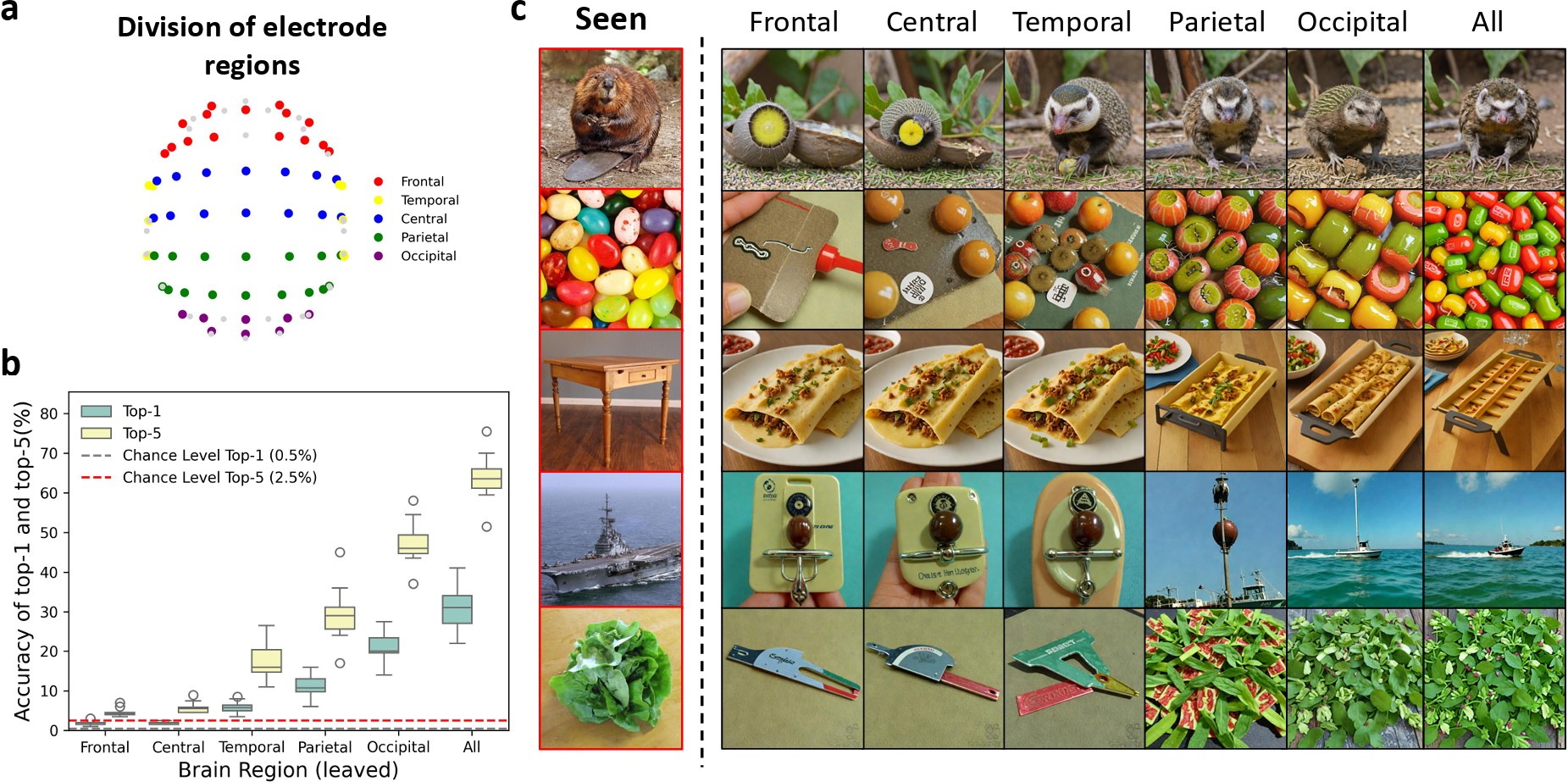

Spatial analysis

Conclusion

Technical Impact

- EEG Encoder (ATM): The Adaptive Thinking Mapper (ATM) is a significant advance in EEG/MEG feature extraction, delivering superior performance across multiple visual decoding tasks.

- Two-Stage Image Generation: Our EEG-guided image generation approach closes the gap with fMRI, showing the potential of EEG data for high-fidelity visual reconstruction.

Neuroscience Insights

- Temporal Dynamics: Our analysis identifies the time windows most critical for visual information processing in the brain and reveals key differences between EEG and MEG data.

- Spatial Encoding: We identified the brain regions most involved in encoding visual information, giving a more detailed picture of the underlying neural processing mechanisms.

For more details, see the full paper: EEG Decoding and Reconstruction Paper.

GitHub Repository: https://github.com/ncclab-sustech/EEG_Image_decode

This paper was published at NeurIPS 2024.