Authors: Chen Wei*, Jiachen Zou*, Dietmar Heinke, Quanying Liu

*Chen Wei and Jiachen Zou contributed equally to this work.

Date: May 20, 2024

Supervisors: Quanying Liu, Dietmar Heinke

Introduction

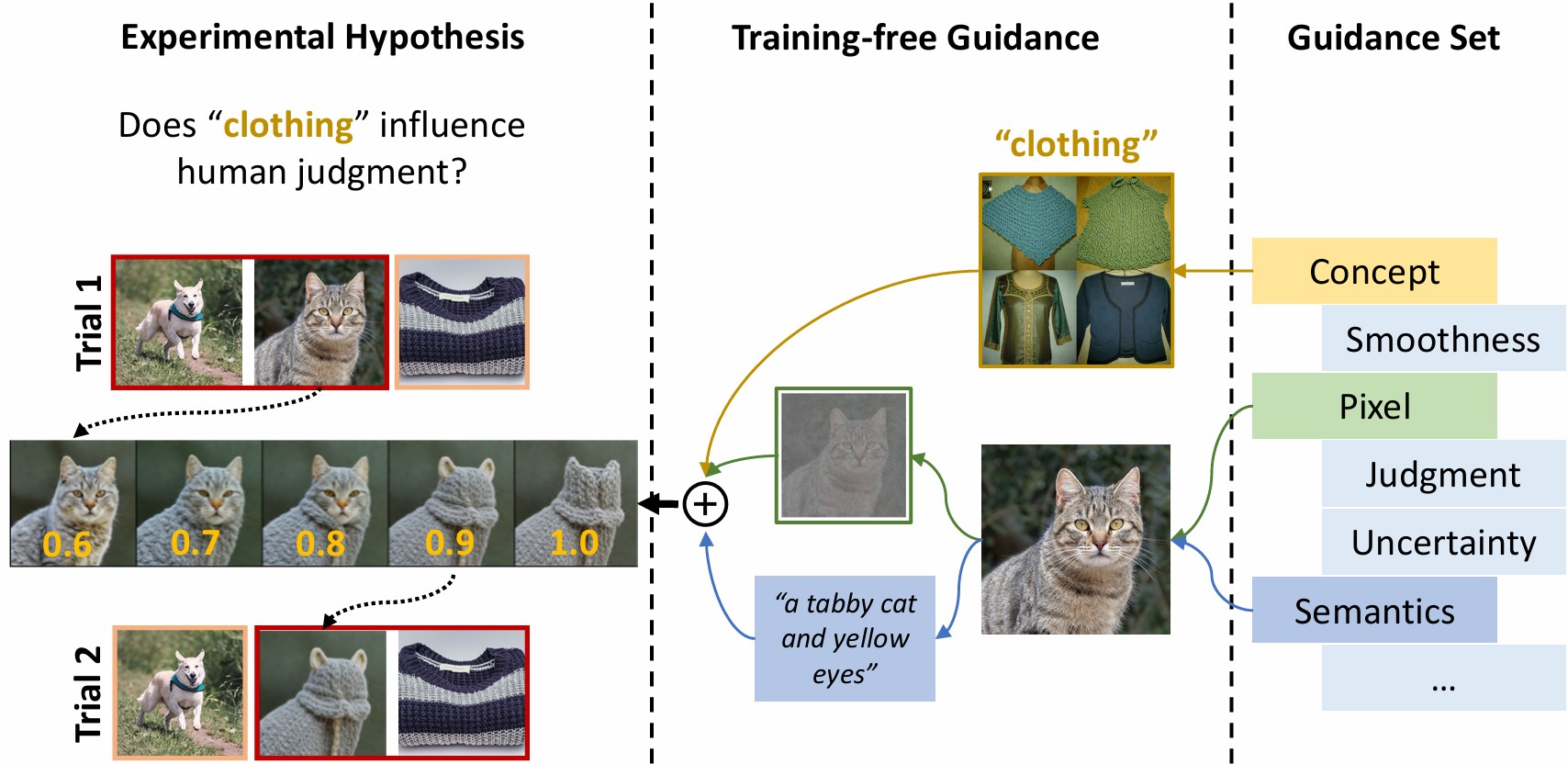

CoCoG Framework:

The concept-based controllable generation (CoCoG) framework uses concept embeddings to generate visual stimuli that can influence decision-making behaviors. However, CoCoG could not flexibly edit or guide visual stimuli along chosen concept dimensions without also altering other image features.

Introducing CoCoG-2:

To overcome these limitations, we introduce the CoCoG-2 framework. CoCoG-2 employs a training-free guidance algorithm, which makes manipulating concept representations more flexible and accurate. This approach allows for more precise control and for integrating experimental conditions into the generation process.

Key Contributions:

- Enhanced Framework: We propose a general framework for designing experimental stimuli based on human concept representations and integrating experimental conditions through training-free guidance.

- Guidance Strategies: We verify a variety of guidance strategies for generating visual stimuli while controlling concepts, behaviors, and other image features.

- Validation of Hypotheses: Our results demonstrate that visual stimuli generated by combining different guidance strategies can validate a variety of experimental hypotheses and enrich our tools for exploring concept representation.

Method

Training-free guidance:

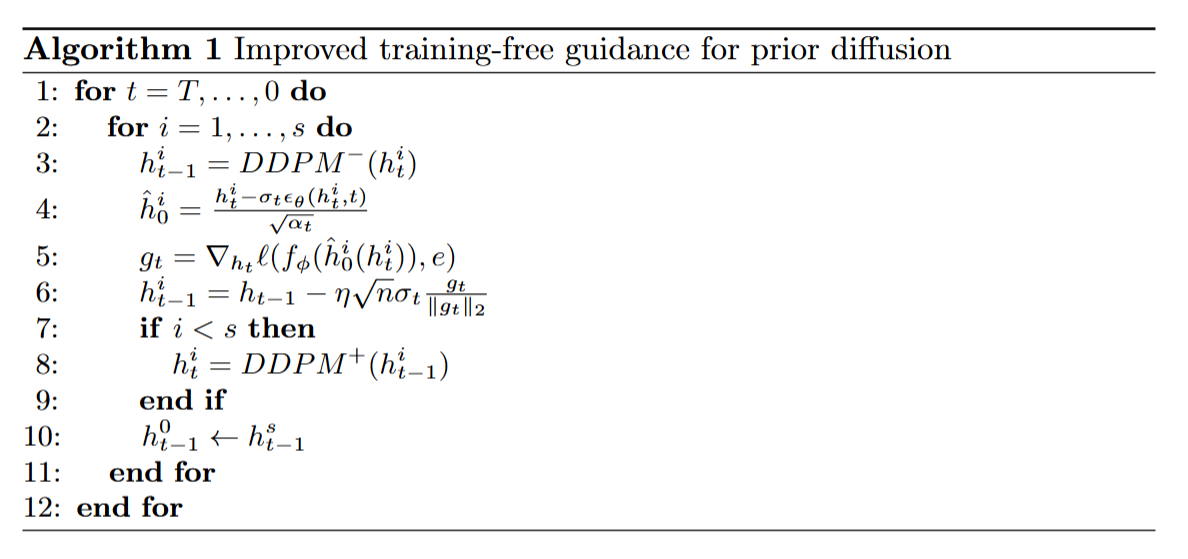

In CoCoG-2, we simplify the joint distribution to p(x, h, e) = p(e)p(h|e)p(x|h), where e includes essential conditions such as concepts and similarity judgments. The CoCoG-2 concept decoder keeps the two-stage process: p(h|e) is modeled by Prior Diffusion, and p(x|h) is then modeled using CLIP guidance. Introducing training-free guidance in the Prior Diffusion stage makes the model more flexible, because the generation of visual stimuli can be controlled by selecting appropriate conditions e.
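In the diffusion literature, training-free guidance is commonly written as a correction to the score of an unconditional model. The block below is a sketch in that standard form, not necessarily the exact formulation used in CoCoG-2. The noisy latent $h_t$, the denoised estimate $\hat{h}_0(h_t)$, the loss $\mathcal{L}$, and the step size $\rho_t$ are notation assumed here for illustration.

```latex
\nabla_{h_t} \log p(h_t \mid e)
  = \nabla_{h_t} \log p(h_t) + \nabla_{h_t} \log p(e \mid h_t),
\qquad
\nabla_{h_t} \log p(e \mid h_t)
  \approx -\rho_t \, \nabla_{h_t} \mathcal{L}\big(\hat{h}_0(h_t),\, e\big)
```

The first identity is Bayes' rule applied to the score. The second replaces a trained conditional model with the gradient of a loss evaluated on the denoised estimate, which is why no additional training is needed.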

To keep training-free guidance stable and effective, we use two techniques: adaptive gradient scheduling and the resampling trick. The algorithm is shown in Figure 1.

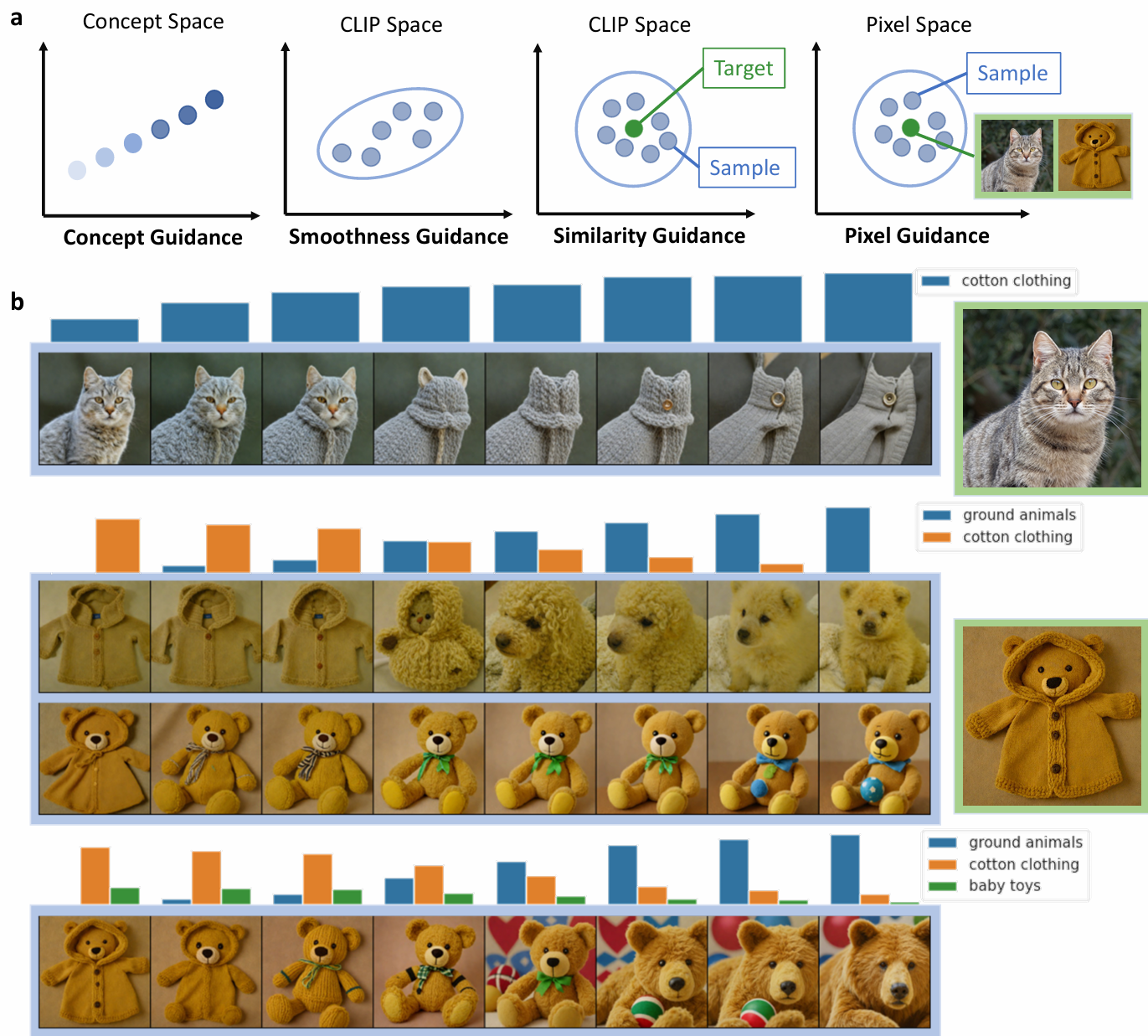

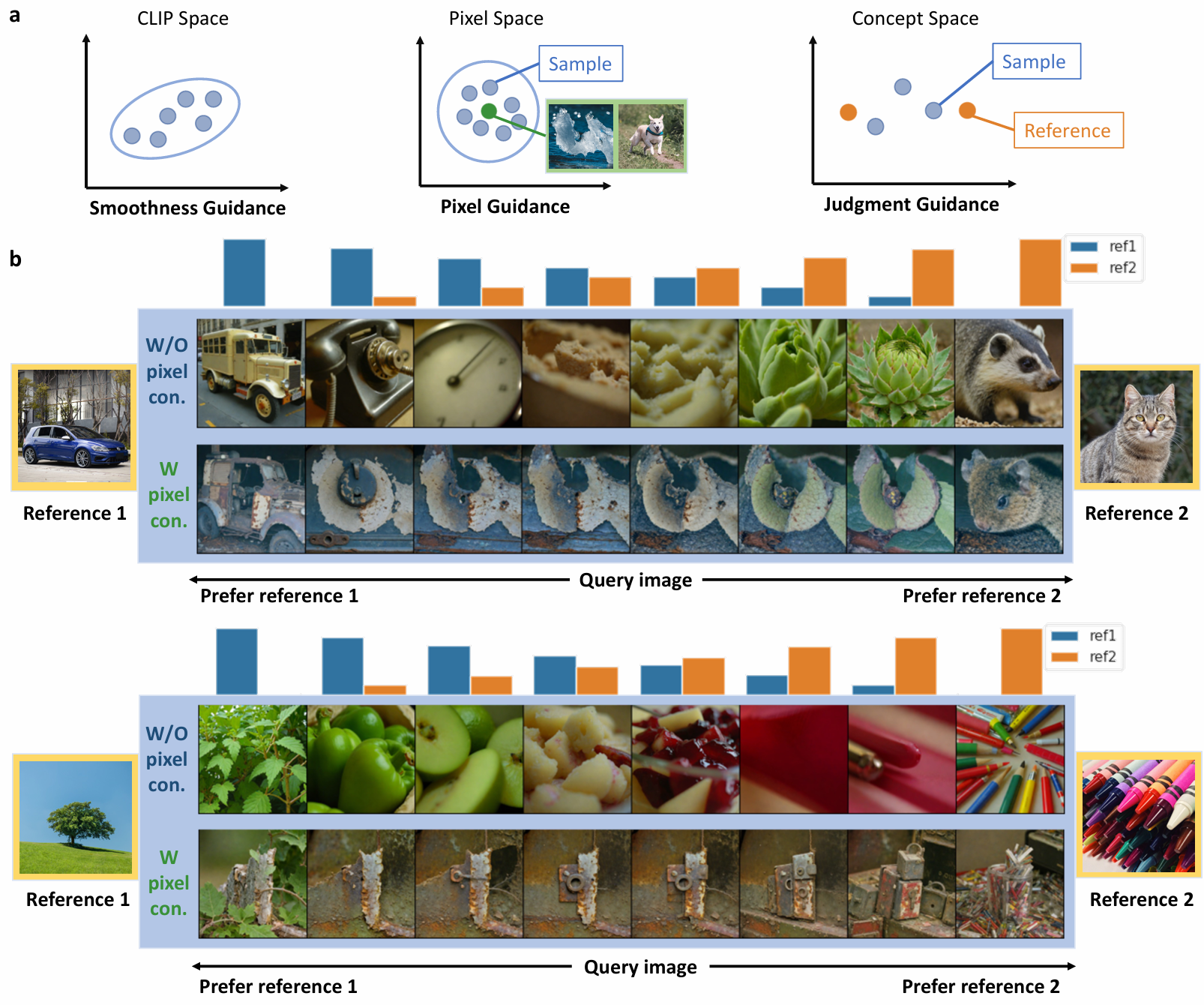

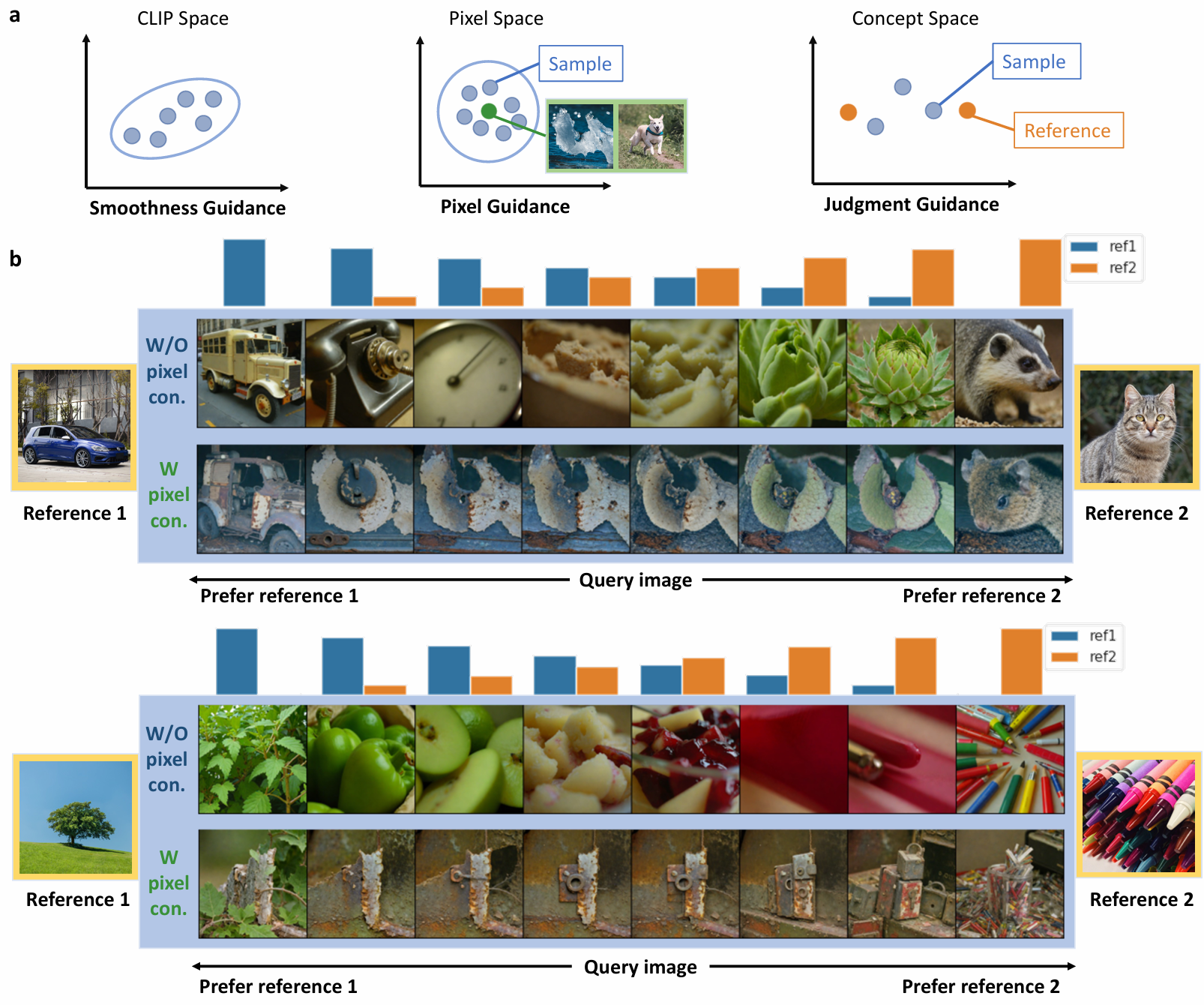
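The loop below is a minimal, self-contained sketch of guided sampling with a resampling step, run on a toy problem rather than the CoCoG-2 model. Several things are assumptions and not taken from the paper: the prior over h is a standard normal (so the denoiser is analytic), the condition e is a target vector with a squared-error loss, a norm cap on the guidance update stands in for adaptive gradient scheduling, and all names (`guided_step`, `sample`, `scale`, `max_norm`, `repeats`) are hypothetical.

```python
import math
import random


def guided_step(h, target, a_t, a_s, scale, max_norm):
    """One guided DDIM-style step from signal level a_t to the cleaner level a_s."""
    # Analytic denoiser for the toy standard-normal prior: E[h0 | h_t] = sqrt(a_t) * h_t.
    h0_hat = [math.sqrt(a_t) * v for v in h]
    eps_hat = [(v - math.sqrt(a_t) * z) / math.sqrt(1.0 - a_t) for v, z in zip(h, h0_hat)]
    # Gradient of the toy loss 0.5 * ||h0_hat - target||^2 with respect to h_t (chain rule).
    grad = [math.sqrt(a_t) * (z - t) for z, t in zip(h0_hat, target)]
    # Assumed stand-in for adaptive gradient scheduling: cap the norm of the update.
    norm = math.sqrt(sum(g * g for g in grad))
    step = scale if scale * norm <= max_norm else max_norm / norm
    return [
        math.sqrt(a_s) * z + math.sqrt(1.0 - a_s) * e - step * g
        for z, e, g in zip(h0_hat, eps_hat, grad)
    ]


def sample(target, steps=50, repeats=3, scale=0.5, max_norm=1.0, seed=0):
    """Draw one sample guided toward `target`; scale=0.0 disables guidance."""
    rng = random.Random(seed)
    # Signal levels from almost pure noise (0.01) to almost clean (0.999).
    levels = [0.01 + 0.989 * i / steps for i in range(steps + 1)]
    h = [rng.gauss(0.0, 1.0) for _ in target]
    for a_t, a_s in zip(levels, levels[1:]):
        for k in range(repeats):
            h_s = guided_step(h, target, a_t, a_s, scale, max_norm)
            if k < repeats - 1:
                # Resampling trick: re-noise the result back to level a_t and redo the step.
                ratio = a_t / a_s
                h = [
                    math.sqrt(ratio) * v + math.sqrt(1.0 - ratio) * rng.gauss(0.0, 1.0)
                    for v in h_s
                ]
        h = h_s
    return h
```

Calling `sample([3.0, -3.0])` returns a two-dimensional sample pulled toward the target, and `sample([3.0, -3.0], scale=0.0)` runs the same loop without guidance. In a real setting the analytic denoiser would be replaced by the trained prior diffusion model and the squared-error loss by the guidance losses.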

Guidance set:

The generative process must satisfy multiple conditions simultaneously, so we combine them in a single loss function. We define six types of guidance (concept, smoothness, semantics, judgment, uncertainty, and pixel guidance) to ensure that the visual stimuli meet our specified requirements.
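One simple way to combine the guidance types listed above is a weighted sum of per-guidance losses. The form below is an assumed sketch: the weights $\lambda_k$ and the per-guidance conditions $e_k$ are notation introduced here, and the paper may combine the terms differently.

```latex
\mathcal{L}(h, e) = \sum_{k \in \mathcal{G}} \lambda_k \, \mathcal{L}_k(h, e_k),
\qquad
\mathcal{G} = \{\text{concept},\ \text{smoothness},\ \text{semantics},\ \text{judgment},\ \text{uncertainty},\ \text{pixel}\}
```

Setting $\lambda_k = 0$ switches a guidance type off, so a given experiment can use only the subset of conditions it needs.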

CoCoG-2 for versatile experiment design

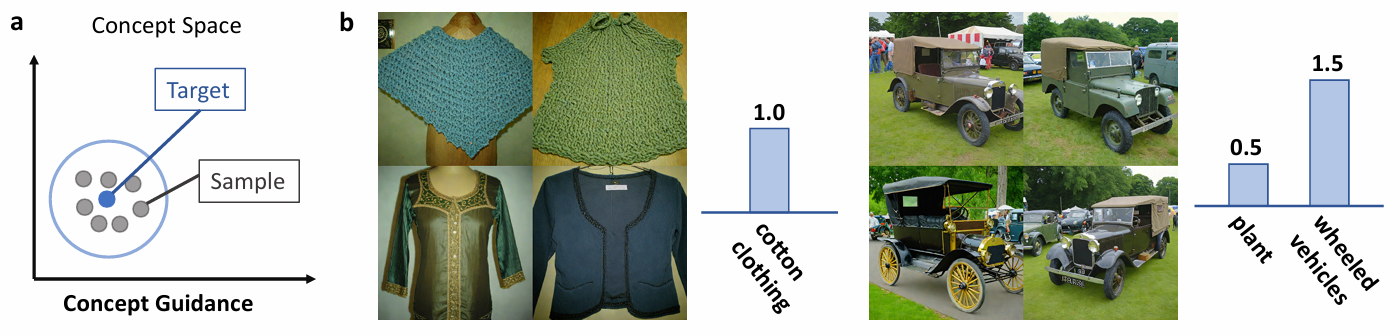

Diverse generation based on concept:

- CoCoG-2 can generate images that are well-aligned with the target concepts, and these images exhibit good diversity in low-level features.

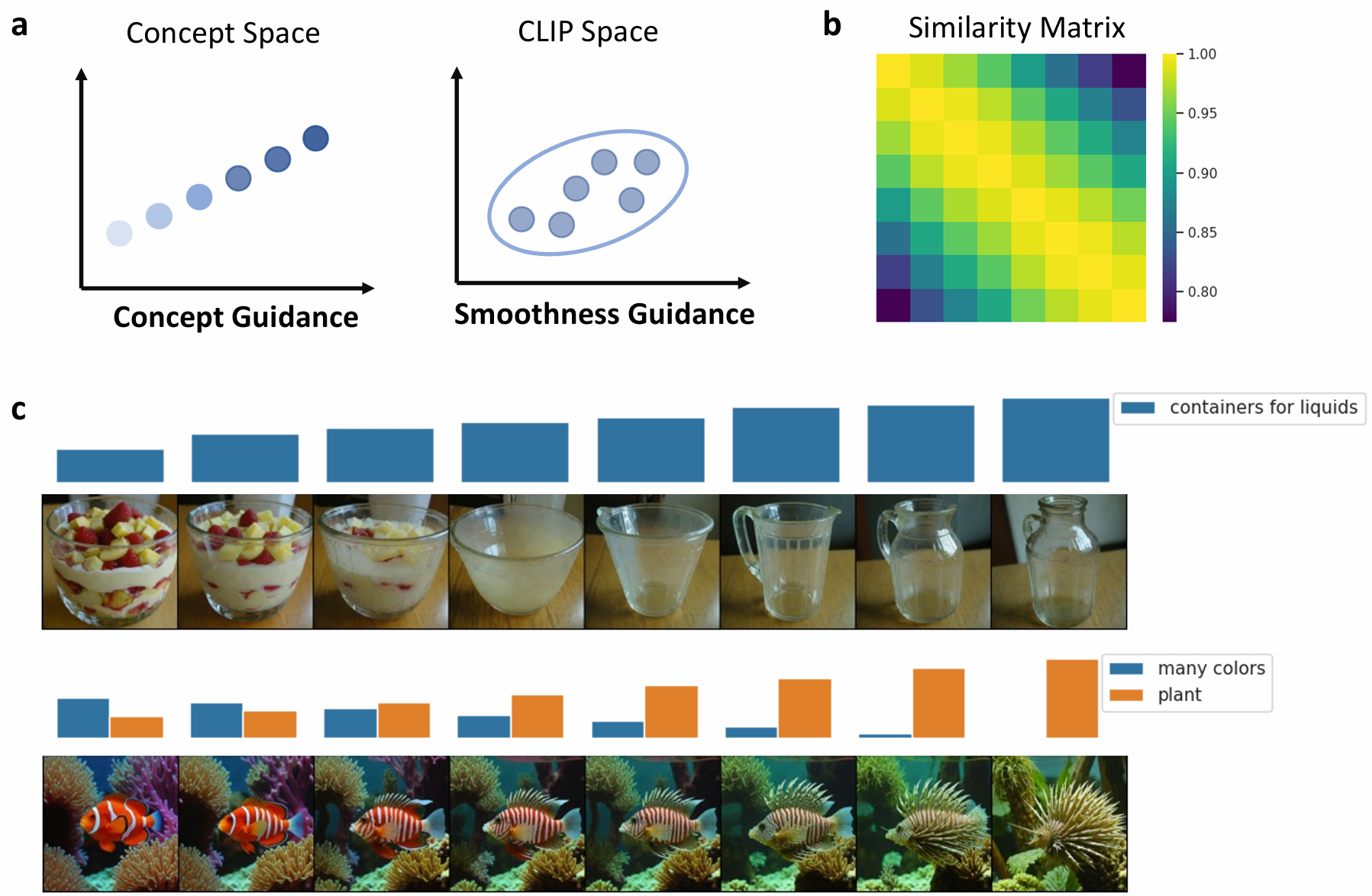

Smooth changes based on concepts:

- CoCoG-2 can modify the activation values of target concepts to generate image trials while maintaining smooth low-level features.

Concept edit of given image:

- CoCoG-2 can start with an “original image” and edit target concepts to generate image trials that are similar to the original image and vary the concepts according to given values.

Multi-path control of similarity judgment:

- CoCoG-2 can directly generate image trials guided by experimental results, while maintaining consistency with the guided image (if available) in shape, color, and other low-level features.

Optimal design for individual preference:

- CoCoG-2 can be used to design and generate visual stimuli that maximize information gain, thereby substantially reducing the number of experiments required in cognitive research.
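One common way to quantify the information gain of a candidate trial is the mutual information between the participant's response and a set of competing hypotheses. The sketch below assumes that definition. The function names, the discrete hypothesis set, and the example trials are hypothetical and are not taken from the paper.

```python
import math


def entropy(probs):
    """Shannon entropy in nats; zero-probability outcomes contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def expected_information_gain(prior, predictions):
    """Mutual information between the response and the hypothesis for one trial.

    prior[k] is the current belief in hypothesis k.
    predictions[k][r] is the probability of response r under hypothesis k.
    """
    n_responses = len(predictions[0])
    # Response distribution after averaging over hypotheses.
    marginal = [
        sum(w * pred[r] for w, pred in zip(prior, predictions))
        for r in range(n_responses)
    ]
    # Average uncertainty that remains once the hypothesis is known.
    expected_conditional = sum(w * entropy(pred) for w, pred in zip(prior, predictions))
    return entropy(marginal) - expected_conditional


def best_trial(prior, candidate_trials):
    """Return the name of the candidate trial with the largest expected gain."""
    return max(
        candidate_trials,
        key=lambda name: expected_information_gain(prior, candidate_trials[name]),
    )
```

A trial on which all hypotheses predict the same response distribution has zero expected gain, while a trial on which they disagree sharply has a high gain. Selecting stimuli this way is what allows fewer trials to separate the hypotheses.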

Conclusion

The CoCoG-2 framework advances controllable visual object generation. It combines concept representations with behavioral insights to guide the image generation process, addressing the limitations of the original CoCoG framework and improving flexibility and efficiency.

- Integration of Concepts and Behavior: CoCoG-2 integrates concept representations with behavioral outcomes, improving the relevance and utility of generated visual stimuli in cognitive research.

- Enhanced Flexibility and Efficiency: By employing a versatile experiment designer and meticulously designed guidance strategies, CoCoG-2 achieves greater flexibility and efficiency in visual stimulus generation.

For more detailed information, see the full paper here: CoCoG-2 Paper.

GitHub Repository: https://github.com/ncclab-sustech/CoCoG-2

This paper has been published in HBAI 2024.